Artificial Intelligence: When Computers Take Over

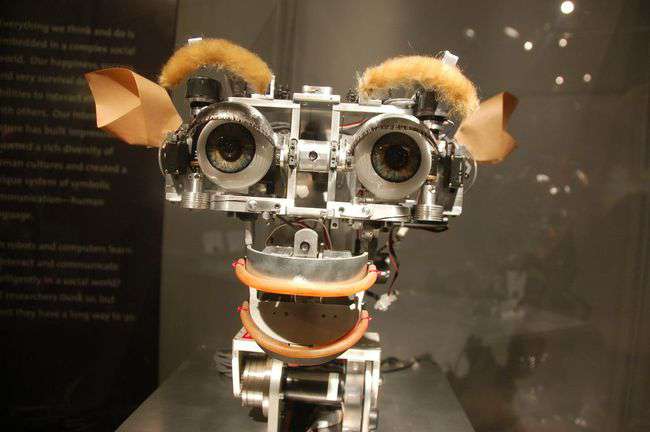

(Kismet, the MIT Robot. Source: Chris Devers)

My path to medicine and surgery came after a career switch from technology. As an undergraduate, I studied computer science, a passion that began in my childhood, when I used to go to school early to mess around with the computers. Mainly to play Boulder Dash, really. I am fairly confident it was also the only time in my life that I actually got to school early.

I spent many years working in information security and in systems and software engineering before leaving to pick up a scalpel. That passion has not faded. I have developed software to analyze and process data as part of my research, and for my own enjoyment on projects unrelated to medicine. Since last year, I’ve been studying machine learning (ML), an exciting subset of artificial intelligence (AI) that is already changing the world in ways many people aren’t yet aware of.

It has become a very hot topic in the media recently, and a few stories I’ve come across today seemed worth mentioning.

When Do the Robots Attack?

First, the biggest current misconception: that machines are about to gain sentience and start to function independently of humans, the sci-fi idea of the robot uprising. We’re not even remotely close to that level of functioning. Geoffrey Hinton, a computer scientist who helped develop several of the key algorithms that enabled machine learning to reach its current state, touched on this in a very interesting brief interview.

Hinton also raises several of the real issues currently facing ML and AI: the potential for militarization and large-scale job loss, and what the next big steps for the field might be.

Make no mistake: the military is far from new to the AI space. A quick search turns up a document entitled “Machine Learning for Military Operations” from twenty years ago, sponsored by the Defense Advanced Research Projects Agency (DARPA), the government research arm that’s created one or two small things, like the internet. They’ve been involved in AI for decades. And there are some interesting insights out there into what they’re doing, or at least into what they’re willing to share. That, I think, gets at what Hinton is concerned about: what are they doing that we don’t know about? With their unrivaled technological resources, the possibilities are quite extensive.

Will the Robots Take Our Jobs?

Maybe. Another very real problem that Hinton mentions is the potential for large-scale job loss:

“In a fair political system, technological advances that increase productivity would be welcomed by everyone because they would allow everyone to be better off. The technology is not the problem. The problem is a political system that doesn’t ensure the benefits accrue to everyone.”

Some, perhaps most notably Elon Musk, support the idea of a universal basic income to provide income in the wake of large-scale job loss, along with incentives for education and the development of new skills.

It is hard to ignore the very real possibility that millions could be displaced from their current jobs. A recent report estimated that up to 47% of current jobs are at risk from advances in AI over the next twenty years, although that number seems a bit high to me (a statement I have no evidence to support or refute). And the most at-risk jobs are those that already pay less, putting even more pressure on an already widening inequality gap: an estimated 83% of jobs paying under $20 per hour are at risk, versus 4% of jobs paying $40 or more per hour.

As with much of the story of AI, we simply don’t know yet. In the past, jobs lost to technological innovation have been replaced by newer, often more highly skilled jobs.

But to ensure that jobs aren’t simply taken away without new opportunities being created, we have to talk about and plan for these issues now. The risk is no secret within the AI field.

In a meeting between manufacturing CEOs and the White House, job loss and job availability were discussed. What I find very concerning in the article about that meeting is the following: “Overall, however, it does not sound like automation ― whether in the manufacturing or services sectors ― was a major source of conversation.”

This is a real problem.

The technology will continue to advance whether we talk about mitigating job loss or not. Not talking about it only leaves us open to a lot of potential problems down the road.

Reality of the Future of Artificial Intelligence

If there is any one predictable thing about AI, it’s that nobody can predict what will come next. Not even the people who helped create the field and are leading key aspects of it, like Hinton, Andrew Ng, or Jeff Dean. The field is leaps and bounds beyond where it was just a few years ago, as major advances build on one another. Add to that the huge predicted increase in corporate spending on AI over the next few years: from $8bn in 2016 to $47bn in 2020. Anyone who tells you they can predict where this field will be in 5-10 years’ time clearly doesn’t know enough about it.
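
For a sense of how steep that spending forecast is, the implied compound annual growth rate over the four years from 2016 to 2020 can be worked out directly from the two figures. This is only arithmetic on the forecast itself, and it assumes smooth year-over-year compounding, which real spending never follows exactly:

$$
\text{CAGR} = \left(\frac{\$47\text{bn}}{\$8\text{bn}}\right)^{1/4} - 1 = 5.875^{1/4} - 1 \approx 0.557
$$

In other words, spending would have to grow by roughly 56% every year for four years running.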

Rapid Change: Proof of Concept

Late last year, the NY Times published a fantastic article about ML/AI and the inner workings of the Google Brain team, a group that quite literally changed the state of machine translation overnight. The piece is well worth reading for a sense of where the field has been, where it is now, and where it is going.

A few years ago, the idea of a computer translating text between two languages as well as a person could was laughable. Literally laughable. How many of us would enter text into Google Translate and send it through a few different languages in a digital game of telephone, just to see how awful the results would be after a few passes? Even after ten years of research and hard work, these translations were often quite obviously done by a machine.

To put it into perspective: last year, that decade of work was completely outpaced by an ML model that took less than a year to build and does a job that is at times indistinguishable from human translation. That model was developed by giving a machine texts that had already been translated into multiple languages; the system then used those texts to learn, on its own, how to translate from one language to another.
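
To make the idea of learning from previously translated text a little more concrete, here is a toy sketch in Python. It is an illustration under heavy assumptions, not what the Google Brain team built: their system is a large neural network, while this uses a simplified version of IBM Model 1, a much older statistical technique that estimates word-to-word translation probabilities from sentence pairs using expectation-maximization (EM). The three German/English sentence pairs and the function names `train_ibm_model1` and `best_translation` are hypothetical, made up for this example.

```python
from collections import defaultdict


def train_ibm_model1(pairs, iterations=10):
    """Estimate word-translation probabilities t(e | f) with EM.

    Simplified IBM Model 1 (an assumption for illustration): no NULL word,
    plain whitespace tokenization, iterations >= 1.
    pairs: list of (foreign_sentence, english_sentence) strings.
    Returns a dict mapping (english_word, foreign_word) -> probability.
    """
    corpus = [(f.split(), e.split()) for f, e in pairs]
    english_vocab = {w for _, e_words in corpus for w in e_words}

    # Start uniform: every English word is equally likely to translate
    # every foreign word.
    t = defaultdict(lambda: 1.0 / len(english_vocab))

    for _ in range(iterations):
        count = defaultdict(float)  # expected counts c(e, f)
        total = defaultdict(float)  # expected counts c(f)
        # E-step: share each English word's unit of credit among the foreign
        # words in the same sentence pair, in proportion to the current t(e | f).
        for f_words, e_words in corpus:
            for e in e_words:
                norm = sum(t[(e, f)] for f in f_words)
                for f in f_words:
                    share = t[(e, f)] / norm
                    count[(e, f)] += share
                    total[f] += share
        # M-step: turn the expected counts back into probabilities.
        t = {(e, f): c / total[f] for (e, f), c in count.items()}
    return t


def best_translation(t, foreign_word):
    """Return the English word with the highest learned t(e | foreign_word)."""
    candidates = {e: p for (e, f), p in t.items() if f == foreign_word}
    return max(candidates, key=candidates.get)


# A hypothetical three-sentence "previously translated" German/English corpus.
pairs = [
    ("das haus", "the house"),
    ("das buch", "the book"),
    ("ein buch", "a book"),
]
t = train_ibm_model1(pairs, iterations=20)
for word in ["das", "haus", "buch", "ein"]:
    print(word, "->", best_translation(t, word))
# das -> the
# haus -> house
# buch -> book
# ein -> a
```

Nobody tells the program that “haus” means “house”. It works that out because “das” keeps appearing alongside “the”, which leaves “house” as the best explanation for “haus”. Modern neural systems learn far richer patterns than single-word pairings, but they too learn from examples of previously translated text.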

Translation: this is only the beginning.