Big (Computer) Brains

(Source: DeepMind PathNet)

I recently wrote a few thoughts on artificial intelligence (AI), primarily in response to Geoffrey Hinton’s excellent short interview. There was one other concept in this interview that I wanted to touch on, which is the relationship between AI and our own intelligence.

In particular, Hinton concludes:

Eventually, I think the lessons we learn by applying deep learning will give us much better insight into how real neurons learn tasks, and I anticipate that this insight will have a big impact on deep learning.

Appreciating this, I think, requires a very brief history lesson on the beginnings of machine learning (ML), AI, and neural networks.

In 1943, Warren McCulloch (who, funny enough, turns out to be a cousin of mine) and Walter Pitts proposed the first artificial neuron. It was known as the Threshold Logic Unit or Linear Threshold Unit. The model was designed to mimic biological neurons, where once the cumulative effect of multiple inputs reached some threshold, the neuron would fire an output. While there was some variability in the threshold of different artificial neurons, the neurons themselves did not have the ability to learn or adapt to their inputs. They either fired, or they didn’t.
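
To make the idea concrete, here is a minimal Python sketch of a threshold unit. The function name and the use of numeric weights are my own simplifications for illustration, not McCulloch and Pitts's original formulation. Nothing in it learns: the weights and threshold are fixed by hand.

```python
def threshold_unit(inputs, weights, threshold):
    """Fire (return 1) when the weighted sum of the inputs reaches the threshold."""
    total = sum(w * x for w, x in zip(weights, inputs))
    return 1 if total >= threshold else 0


# Input pairs are visited in the order (0,0), (0,1), (1,0), (1,1).
# With unit weights, the threshold alone decides which logic gate the unit computes.
and_gate = [threshold_unit((a, b), (1, 1), threshold=2) for a in (0, 1) for b in (0, 1)]
or_gate = [threshold_unit((a, b), (1, 1), threshold=1) for a in (0, 1) for b in (0, 1)]

print(and_gate)  # [0, 0, 0, 1]
print(or_gate)   # [0, 1, 1, 1]
```

Changing the threshold from 2 to 1 turns an AND gate into an OR gate, but someone has to choose that number; the unit cannot discover it from examples.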

This simple model was then advanced significantly in 1957 by Frank Rosenblatt. He developed an algorithm known as the perceptron, which remains the foundation for several modern ML algorithms. It had the ability to learn from its inputs, and adjust how it determined whether to output a 0 or a 1 (i.e., whether or not to fire). In short, as the algorithm saw more examples and more data, it would adjust how it calculated its predicted output to best classify data it had already seen as well as the new data. It was learning.
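
As a rough illustration of what "adjusting" means, here is a small Python sketch of the classic perceptron update rule. The function names, the learning rate, and the choice of the OR function as training data are my own assumptions for the example.

```python
def predict(weights, bias, x):
    """Output 1 (fire) when the weighted sum plus bias is positive, else 0."""
    return 1 if sum(w * xi for w, xi in zip(weights, x)) + bias > 0 else 0


def train_perceptron(samples, labels, learning_rate=1.0, max_epochs=100):
    """Nudge the weights toward the correct answer after every mistake."""
    weights = [0.0] * len(samples[0])
    bias = 0.0
    for _ in range(max_epochs):
        mistakes = 0
        for x, target in zip(samples, labels):
            error = target - predict(weights, bias, x)  # -1, 0, or +1
            if error != 0:
                mistakes += 1
                weights = [w + learning_rate * error * xi for w, xi in zip(weights, x)]
                bias += learning_rate * error
        if mistakes == 0:  # every example classified correctly, so stop
            break
    return weights, bias


samples = [(0, 0), (0, 1), (1, 0), (1, 1)]
or_labels = [0, 1, 1, 1]
weights, bias = train_perceptron(samples, or_labels)
predictions = [predict(weights, bias, x) for x in samples]
print(predictions)  # [0, 1, 1, 1]
```

Unlike the fixed threshold unit, nobody hands this model its weights. It starts from zeros and corrects itself each time it gets an example wrong.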

The perceptron model was a huge step, but it was still somewhat limited. The data had to be linearly separable, meaning that a single line or plane could divide one class from the other. When no such separation exists, the perceptron can keep running forever trying to find one. As a result, learning to make more complicated decisions was out of reach.
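
The textbook example of data that no single line can split is XOR (output 1 when exactly one input is 1). XOR is my choice of illustration here. The Python sketch below runs the perceptron rule with an epoch cap, which I have added so the loop cannot actually run forever, and reports whether it ever gets through a full pass without a mistake.

```python
def perceptron_converges(samples, labels, max_epochs=1000):
    """Return True if the perceptron rule reaches an epoch with zero mistakes."""
    weights, bias = [0.0, 0.0], 0.0
    for _ in range(max_epochs):
        mistakes = 0
        for (x1, x2), target in zip(samples, labels):
            output = 1 if weights[0] * x1 + weights[1] * x2 + bias > 0 else 0
            error = target - output
            if error:
                mistakes += 1
                weights[0] += error * x1
                weights[1] += error * x2
                bias += error
        if mistakes == 0:
            return True
    return False  # gave up: no separating line found within the cap


samples = [(0, 0), (0, 1), (1, 0), (1, 1)]
and_converged = perceptron_converges(samples, [0, 0, 0, 1])  # a line can split AND
xor_converged = perceptron_converges(samples, [0, 1, 1, 0])  # no line can split XOR
print(and_converged, xor_converged)  # True False
```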

Over time, this was adapted into the multilayer perceptron (MLP), a type of artificial neural network composed of many perceptron-like units arranged in layers, some using different activation functions. This more complicated network of neurons can successfully classify harder problems that can’t be separated by a straight line.
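
Here is a tiny Python sketch of why layering helps, using XOR as an example of a problem that no single straight line can separate. The weights are picked by hand purely for illustration. A real MLP would learn them from data, typically with smooth activation functions and backpropagation rather than the hard step used here.

```python
def step(value):
    """Hard threshold activation: fire when the input is positive."""
    return 1 if value > 0 else 0


def xor_network(a, b):
    # Hidden layer: two threshold units, each drawing its own straight line.
    hidden_or = step(a + b - 0.5)      # fires if at least one input is 1
    hidden_nand = step(-a - b + 1.5)   # fires unless both inputs are 1
    # Output layer: fires only when both hidden units fire.
    return step(hidden_or + hidden_nand - 1.5)


outputs = [xor_network(a, b) for a in (0, 1) for b in (0, 1)]
print(outputs)  # [0, 1, 1, 0]
```

Each hidden unit on its own is still limited to a straight-line decision. It is the combination of the two in a second layer that carves out a region no single line could.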

It also, of course, sounds closer to the structure of the brain - a complex organ composed of tens of billions of neurons, each of which is connected to thousands of other neurons - albeit on a smaller scale. But there is one nuanced difference: these neural networks must be trained with very large datasets and the resulting model is applied to only a single task. Indeed, Hinton says:

One problem we still haven’t solved is getting neural nets to generalize well from small amounts of data, and I suspect that this may require radical changes in the types of neuron we use.

A neural net trained to drive a car, for example, doesn’t know how to spot cancer on a CT scan. How, then, is it that a child can see an owl one day and be told it’s an owl, and the next day see something that looks kind of similar and infer that it has similar abilities? Yet we have to train a computer on many, many more images for it to learn what an owl looks like, and if you throw it a curveball, like a hawk, it is dumbfounded.

The problem here is scale.

The child may be very young and inexperienced by human standards, but her brain already has a far more complicated structure than the most advanced neural networks currently in use. She has the ability to transfer information she’s learned about one concept to another. This ability, known as transfer learning, remains a hard problem in ML.

But like many things technological, it’s only a matter of time. Moore’s law, a commonly cited concept in computing, observes that the number of transistors that can fit on a chip doubles roughly every eighteen to twenty-four months. Some have extrapolated it to suggest that computing power and capabilities also double over that period. If you believe that, it stands to reason that we will very soon be able to build more and more complicated neural networks. Couple that with today’s advanced computing environments, like Google’s recently launched Cloud Machine Learning platform, and you can see where this is going.

DeepMind, an AI company acquired by Google in 2014, recently published a fascinating paper moving in this direction.

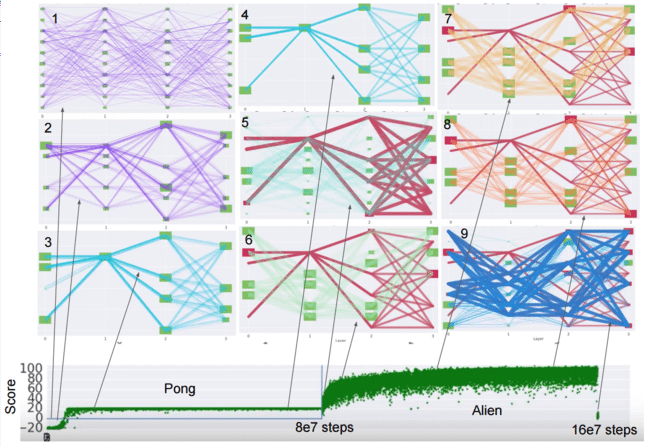

They call it PathNet, a system composed of many distinct, interconnected neural networks. (SkyNet, anyone?) The system learns from many different tasks, and it can process data using specific subsets of its different neural network paths. Although intermediate pathways can be shared between different tasks, each task has a unique final output layer, allowing for distinct decisions.

In discovering and developing its pathways, PathNet compares two pathways at a time. It determines which of the two performs better, and then replaces the lower-performing pathway with a mutated version of the better one. The authors report improved learning performance compared to traditional training of individual neural networks.
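
The compare-and-replace loop can be sketched in a few lines of Python. This is a toy under loud assumptions, not DeepMind's implementation: a "pathway" here is just one module index per layer, the fitness function is a made-up score against a hypothetical ideal pathway (`TARGET`) rather than the result of actually training a network, and the mutation rate and population size are arbitrary.

```python
import random

NUM_LAYERS = 3            # assumed toy size
MODULES_PER_LAYER = 10    # assumed toy size
TARGET = [4, 7, 1]        # hypothetical "ideal" pathway, for scoring only


def fitness(pathway):
    """Toy score: how many layers use the same module as TARGET."""
    return sum(1 for chosen, ideal in zip(pathway, TARGET) if chosen == ideal)


def mutate(pathway, rng, rate=0.3):
    """Copy the pathway, randomly re-picking each layer's module with probability `rate`."""
    return [rng.randrange(MODULES_PER_LAYER) if rng.random() < rate else module
            for module in pathway]


def tournament_step(population, rng):
    """Compare two random pathways; overwrite the loser with a mutated copy of the winner."""
    i, j = rng.sample(range(len(population)), 2)
    if fitness(population[i]) >= fitness(population[j]):
        winner, loser = i, j
    else:
        winner, loser = j, i
    population[loser] = mutate(population[winner], rng)


rng = random.Random(0)  # fixed seed so runs are repeatable
population = [[rng.randrange(MODULES_PER_LAYER) for _ in range(NUM_LAYERS)]
              for _ in range(8)]

best_before = max(fitness(p) for p in population)
for _ in range(500):
    tournament_step(population, rng)
best_after = max(fitness(p) for p in population)

print(best_before, best_after)
```

Because the winner of each comparison is always kept unchanged, the best score in the population can never go down. Only the weaker pathway is ever overwritten.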

This is similar to our brains, in the sense that different regions are specialized for different tasks. This enables processing of similar inputs through similar pathways, and allows for optimization of resources and the creation of an organ more powerful than the sum of its individual parts. The PathNet paper concludes with:

The operations of PathNet resemble those of the Basal Ganglia, which we propose determines which subsets of the cortex are to be active and trainable as a function of goal/subgoal signals from the prefrontal cortex, a hypothesis related to others in the literature.

This is a fascinating advancement that I think brings us a significant step closer in the very long journey towards true artificial general intelligence. It doesn’t yet address Hinton’s point about generalizing from small amounts of data, but it’s a big step in the right direction.